Deep learning first struggled with training the hidden layers, and then with poor performance. The first problem was caused by the vanishing gradient. The second was caused by improper training (overfitting) and by computational load. So, the difficulties deep learning faced were:

Vanishing Gradient

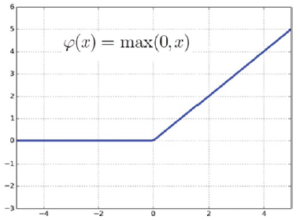

When the errors calculated at the output cannot propagate back through the hidden layers, the problem is called the vanishing gradient. If the errors cannot travel backward, the hidden layers cannot be trained. A common remedy is the Rectified Linear Unit (ReLU) function, which outputs zero for negative inputs and the input itself otherwise. Its derivative is 1 for every positive input, so the error is not shrunk at each layer the way it is with a saturating function such as the sigmoid. If we use ReLU as the activation function of the hidden layers, the errors can propagate back through them far more effectively. The figure in this section shows the ReLU function.
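As a rough illustration of why the choice of activation matters, the sketch below multiplies the activation derivatives that an error signal would pass through in a 10-layer network. It is a simplification under stated assumptions: the weights are ignored, every node is assumed to have the same positive pre-activation value of 0.5, and the helper name `backprop_factor` is made up for this example.

```python
import math

def relu(x):
    # ReLU: zero for negative inputs, identity otherwise
    return max(0.0, x)

def relu_grad(x):
    # Derivative of ReLU: 1 for positive inputs, 0 otherwise
    return 1.0 if x > 0 else 0.0

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    # Derivative of the sigmoid; never larger than 0.25
    s = sigmoid(x)
    return s * (1.0 - s)

def backprop_factor(grad_fn, pre_activations):
    # Product of activation derivatives along one backward path
    # (hypothetical helper; weights are deliberately left out)
    factor = 1.0
    for z in pre_activations:
        factor *= grad_fn(z)
    return factor

# Assumed pre-activation values for a 10-layer path
pre_activations = [0.5] * 10
sigmoid_factor = backprop_factor(sigmoid_grad, pre_activations)
relu_factor = backprop_factor(relu_grad, pre_activations)

print("sigmoid:", sigmoid_factor)
print("relu:   ", relu_factor)
```

With the sigmoid, the factor shrinks by at least a factor of four per layer, so almost nothing reaches the early layers. With ReLU, the factor stays at 1 as long as the nodes on the path are active.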

Overfitting

Complicated models are more vulnerable to overfitting. Each layer added to a network makes the model more complicated, and therefore more prone to overfitting. This problem can be addressed with dropout. In dropout, a certain percentage of nodes is randomly selected for each training pass, and the rest are disconnected (their outputs are set to zero) for that pass. In the next pass a new random set of nodes is selected, and the rest are again disconnected. Dropping about 50% of the nodes in the hidden layers and about 25% in the input layer usually yields acceptable results.
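The dropout step can be sketched in a few lines. This is a minimal sketch, not a reference implementation: the function names `dropout_mask` and `apply_dropout` are hypothetical, the drop ratio is assumed to be at least 0 and strictly less than 1, and the surviving outputs are scaled by `1 / (1 - drop_ratio)`, a common convention that keeps the expected total activation roughly unchanged.

```python
import random

def dropout_mask(num_nodes, drop_ratio, rng):
    # Keep a fixed share of nodes, chosen at random for this training pass
    num_keep = round(num_nodes * (1.0 - drop_ratio))
    keep = set(rng.sample(range(num_nodes), num_keep))
    # Survivors are scaled up; dropped nodes get a mask value of zero
    scale = 1.0 / (1.0 - drop_ratio)
    return [scale if i in keep else 0.0 for i in range(num_nodes)]

def apply_dropout(activations, drop_ratio, rng):
    # Multiply each node output by its mask value
    mask = dropout_mask(len(activations), drop_ratio, rng)
    return [a * m for a, m in zip(activations, mask)]

rng = random.Random(0)  # seeded only to make the example repeatable
hidden = [0.2, 0.9, 0.4, 0.7, 0.1, 0.6, 0.3, 0.8]
dropped = apply_dropout(hidden, 0.5, rng)
print(dropped)
```

A fresh mask is drawn on every call, so a different subset of nodes is disconnected in each training pass. Dropout is applied only during training; at inference time all nodes are used.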

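Another way of preventing overfitting is regularization. This approach adds a penalty on the magnitude of the weights to the cost function, which pushes the network toward the simplest architecture that still fits the data. As a result, the possibility of overfitting is reduced.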
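One widely used form of regularization, assumed here for illustration, is an L2 penalty: the sum of squared weights, scaled by a coefficient, is added to the ordinary cost. The sketch below uses a sum-of-squared-errors cost; the names `l2_penalty`, `regularized_cost`, and the coefficient `lam` are hypothetical.

```python
def l2_penalty(weights, lam):
    # Penalty grows with the squared magnitude of every weight
    return 0.5 * lam * sum(w * w for w in weights)

def regularized_cost(errors, weights, lam):
    # Ordinary sum-of-squared-errors cost plus the weight penalty
    data_cost = 0.5 * sum(e * e for e in errors)
    return data_cost + l2_penalty(weights, lam)

errors = [1.0, -1.0]   # assumed output errors
weights = [2.0, 0.0]   # assumed network weights, flattened into one list
print(regularized_cost(errors, weights, lam=0.1))
```

Because large weights now raise the cost, training favors solutions in which many weights stay close to zero, which behaves like a simpler network.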

Computational Load

Computational load increases the training time. The number of weights grows quickly as hidden layers are added, and more weights mean more computation in every training step. If a network takes 6 days to train, we can modify and retrain it only about 5 times in a month, which is a major obstacle to development. Using high-performance hardware such as a GPU instead of a CPU, and algorithms such as batch normalization, reduces the training time.
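To make the numbers concrete, the sketch below counts the weights of a fully connected network and works out how many train-and-modify cycles fit in a period. The layer sizes are made-up examples, biases are ignored, a 30-day month is assumed, and both function names are hypothetical.

```python
def count_weights(layer_sizes):
    # Each pair of adjacent layers contributes n_in * n_out weights
    return sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

def trainings_per_period(period_days, days_per_training):
    # Number of complete training runs that fit in the period
    return period_days // days_per_training

shallow = count_weights([784, 100, 10])        # one hidden layer
deeper = count_weights([784, 100, 100, 10])    # two hidden layers

print(shallow, deeper)
print(trainings_per_period(30, 6))
```

Every extra weight adds work to each forward and backward pass, so anything that shortens a single training run directly increases the number of design iterations that are possible.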